The Poetry of Code - Part 2: Addressing the Fear

This is Part 2 of “The Poetry of Code” series. Part 1, “Building Your Digital Self,” covered the co-pilot mindset and how I use AI tools as extensions of myself. This piece addresses the real objections head-on.

In Part 1, I shared my journey from AI skeptic to daily user. I explained how I use Kiro, Claude, and Copilot as extensions of myself rather than replacements for my thinking.

But I glossed over something important: the objections.

The resistance I encountered in that meeting wasn’t irrational. The concerns were real. And they deserve serious engagement, not dismissal.

Let me take them one at a time.

“AI Can’t Be Trusted to Produce Quality”

This is true if you use AI as a slot machine. Put in a prompt, accept whatever comes out, ship it without review. That produces garbage.

It’s false if you use AI as a collaborator. The output is a draft, not a final product. You review it. You refine it. You apply your judgment to determine what’s good and what needs work. The quality comes from the collaboration, not from blind acceptance.

I don’t trust AI to produce quality. I trust myself to evaluate and improve what AI produces. That’s the difference.

The practitioners who complain about AI quality are often the ones who expected magic. They wanted to describe a problem and receive a perfect solution. When that didn’t happen, they concluded the tools don’t work.

But that’s not how any tool works. A hammer doesn’t build a house. A skilled carpenter with a hammer builds a house. The tool amplifies capability. It doesn’t replace skill.

AI amplifies your ability to produce. The quality still depends on you.

“If You Use AI to Create, How Can It Be Supported?”

This assumes the person using AI doesn’t understand what they created. That’s only true if you’re copy-pasting without comprehension.

When I build something with Kiro, I understand what was built. I directed the architecture. I reviewed the implementation. I made decisions about structure and approach. The AI accelerated the creation, but the understanding is mine.

If anything, AI-assisted creation can improve supportability. The tools enforce patterns. They generate documentation. They catch inconsistencies. A codebase built with AI assistance, by someone who understands what they’re building, is often more consistent than one built entirely by hand.

The supportability concern is really a competence concern in disguise. And it’s valid, if AI tools are used by people who don’t understand what they’re building. But that’s an argument for using AI tools thoughtfully, not an argument against using them at all.

“AI Is Replacing Humans”

This fear is legitimate but misdirected. And it’s worth unpacking, because there’s something deeper happening here.

I’m not a developer. I need to be clear about that. I’ve worked alongside developers for over a decade. I know rudimentary scripting, the importance of code comments, general DevOps principles. But I’m not a software developer. I’m an architect. My craft is different.

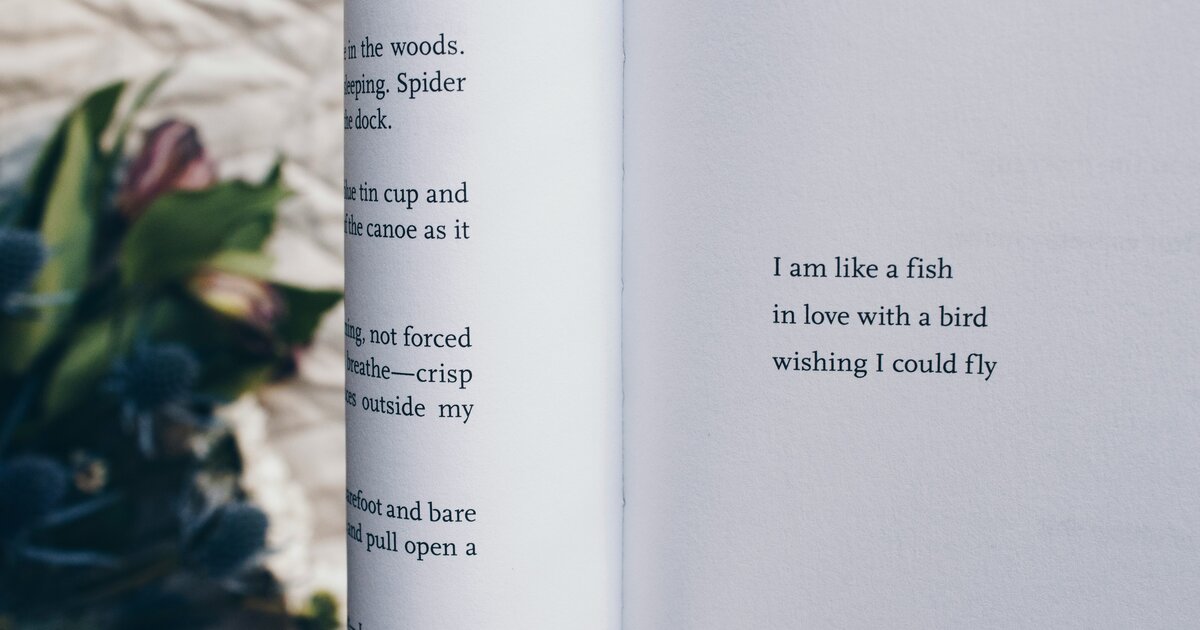

In that meeting, one of the concerns came from a developer who’s spent their career building and coding. Someone who has invested years mastering their craft. We called it “the poetry of code.” There’s a tendency among skilled developers to value the act of software development itself. The elegance of the design. The cleverness of the solution. The craft of writing beautiful, efficient code.

I understand that more than they might expect. Because I have my own version of it.

My poetry isn’t code. It’s architectural design. The infrastructure representation of solving complex business problems. The layer that developers build on top of. I’ve spent years learning to see systems holistically, to translate business needs into technical foundations, to design environments that scale and adapt. That’s my craft. That’s what I took pride in.

And I’ve had to work through the same identity shift they’re facing.

This was the uncomfortable part for me: the mechanics I spent years mastering are no longer the bottleneck. Someone with vision and business knowledge, paired with these tools, can produce functional work faster than I ever could manually. Not because my experience doesn’t matter. But because the tools have shifted where experience matters most.

If someone has the vision, the business knowledge, and the ability to have a conversation with themselves about what they’re trying to accomplish, they can produce architectural designs that are functional and sound. The mechanics, the syntax of infrastructure as code, the implementation details: the AI handles those. What matters now is direction, judgment, and clarity about outcomes.

This feels threatening if your identity is wrapped up in the craft itself. If the poetry was the point, then tools that write the poetry feel like an attack on your value.

I’ve felt that. It’s real.

But I’ve also reframed it: what becomes possible when a senior architect or developer with decades of experience embraces these tools?

I can barely imagine it. It’s almost limitless.

Think about what that senior practitioner actually has. Deep pattern recognition from years of seeing systems succeed and fail. Intuition about where complexity hides and where shortcuts create debt. Understanding of edge cases that only comes from having been burned by them. Judgment developed over hundreds of projects.

None of that goes away with AI. All of it becomes more powerful.

The senior practitioner who overcomes their attachment to the craft and reimagines what’s important can produce at a level that wasn’t possible before. Their vision, accelerated by tools that handle implementation. Their judgment, applied to output generated in hours instead of weeks. Their experience, leveraged across more projects than they could have touched when every artifact required their direct attention.

The challenge now isn’t learning to code in .NET or C++, or mastering the intricacies of cloud infrastructure. The challenge is getting skilled practitioners to overcome their sense of self and reframe what’s actually valuable. The end product matters. The outcome matters. The craft of manually creating every artifact that produces that outcome is becoming less central, not more.

The question isn’t whether AI will affect your job. It will. The question is whether you’ll be the person who knows how to direct these tools effectively, or the person who refused to learn while others did.

I’d rather be indispensable because I amplified my capabilities than replaceable because I didn’t.

The Hammer in Your Hand

Here’s something important about augmentation: these tools augment whatever you bring to them.

They augment your strengths. They also augment your weaknesses. They accelerate your search for knowledge. They can also accelerate your bad habits if you let them. The tool doesn’t make those choices. You do.

Think about a hammer. It’s a tool for driving nails. But there are various sizes of hammers for various jobs. And hammers aren’t the only tool that drives nails. Nail guns exist. So do screws and screwdrivers for different applications entirely. The tool doesn’t tell you which fastener to use or where to place it. Your judgment does. The tool just executes faster than your bare hands could.

AI is the same. It doesn’t replace your judgment. It executes on your judgment faster than you could alone. If your judgment is sound, the output is better. If your judgment is flawed, the output reflects that too.

These tools can’t do everything. Not yet. Maybe not ever. They don’t replace me. They augment me. And what they augment depends entirely on what I bring to the collaboration.

There’s an evolution happening right now. You can fear it, or you can understand it. You can wait until the landscape shifts beneath you, or you can get ahead of where it’s going. The practitioners who engage now, who develop fluency while the tools are still maturing, won’t be caught off guard when the next wave arrives. They’ll be ready.

Start Somewhere

If you’re reading this as someone who hasn’t seriously tried AI tools yet, here’s my challenge: pick one task this week and use AI to help.

Not a critical task. Not something high-stakes. Pick something you were going to do anyway, and see if AI can accelerate it.

Draft an email. Outline a document. Debug a piece of code. Organize your notes from a meeting. Something small, something low-risk, something where you can evaluate the output against what you would have produced alone.

Then pay attention to what happens. Where did the AI help? Where did it miss? Where did you have to apply judgment to improve what it produced?

That evaluation process is the skill. The more you practice it, the better you get at directing these tools effectively. The skeptics who never try never develop that skill. The practitioners who experiment deliberately become more capable over time.

The Tools Will Only Get Better

One more thing to consider: these tools are in their infancy.

What AI can do today is remarkable. What it will do in two years, five years, ten years will make today’s capabilities look primitive. The trajectory is clear even if the specifics are uncertain.

The practitioners who build fluency with AI tools now will be positioned to leverage improvements as they come. The practitioners who resist will find themselves increasingly behind, trying to catch up to colleagues who’ve been developing these skills for years.

I don’t say this to create fear. I say it because it’s the reality of the landscape. The assembly line didn’t go away because some craftsmen refused to adapt. It became the standard, and the craftsmen who adapted thrived while those who didn’t found themselves marginalized.

AI-assisted work is becoming the standard. The question is whether you’ll be fluent or playing catch-up.

The Extension of You

I can’t work without these tools now. Not because I’m dependent on them in some unhealthy way. Because they’ve become extensions of how I think and create.

When I sit down to write, Claude is there to help me organize my thoughts. When I sit down to build, Kiro is there to help me implement my ideas. When I need to process complexity, AI helps me move faster without sacrificing quality.

These are tools. Like any tools, they require skill to use well. Like any tools, they amplify capability rather than replacing it. Like any tools, they’re only as good as the person directing them.

The resistance I encountered in that meeting comes from fear of the unknown. That fear is understandable. But it’s not a reason to avoid learning.

Give the tools a serious try. Direct them deliberately. Apply your judgment to their output. See what becomes possible when you stop treating AI as a threat and start treating it as an extension of yourself.

You might find, like I did, that the skepticism fades quickly once you experience what’s actually possible.

The poetry of code isn’t in the syntax. It’s in the vision. And these tools let you express that vision faster than ever before.

For a practical walkthrough of setting up AI-assisted development, see my post on configuring Kiro.

Photo by Nicolas Hoizey on Unsplash