AI Observability

A request that completes in 800ms, uses 1,200 tokens, and passes content filters might still produce a response that's confidently wrong. That gap is where most AI observability stops and most AI value leaks away.

Multi-part frameworks and deep dives. These aren't just related posts—they're structured bodies of work building toward complete understanding.

Stop asking if you can trust AI. Start asking what would give you confidence in systems you've built, observed, and refined.

SRE failed outside Google because the promise couldn't travel. Here's a promise that can.

Your engineers are drowning in decisions that don't matter. The chaos isn't a failure of governance. It's a failure of leadership.

The technical skills are table stakes. The real job is translation.

Azure Monitor isn't broken. Your implementation of it is. Here's how to build monitoring that produces signal instead of noise.

The platform is what holds everything together. When it's solid, teams build confidently. When it's shaky, everything falls apart slowly.

Azure Monitor Logs is quite possibly one of the most powerful log aggregation platforms out there. The hardest part isn't the syntax. It's understanding the data you're working with.

Your dashboards are green. Your customers aren't complaining. And you might still be blind to 90% of what's actually happening.

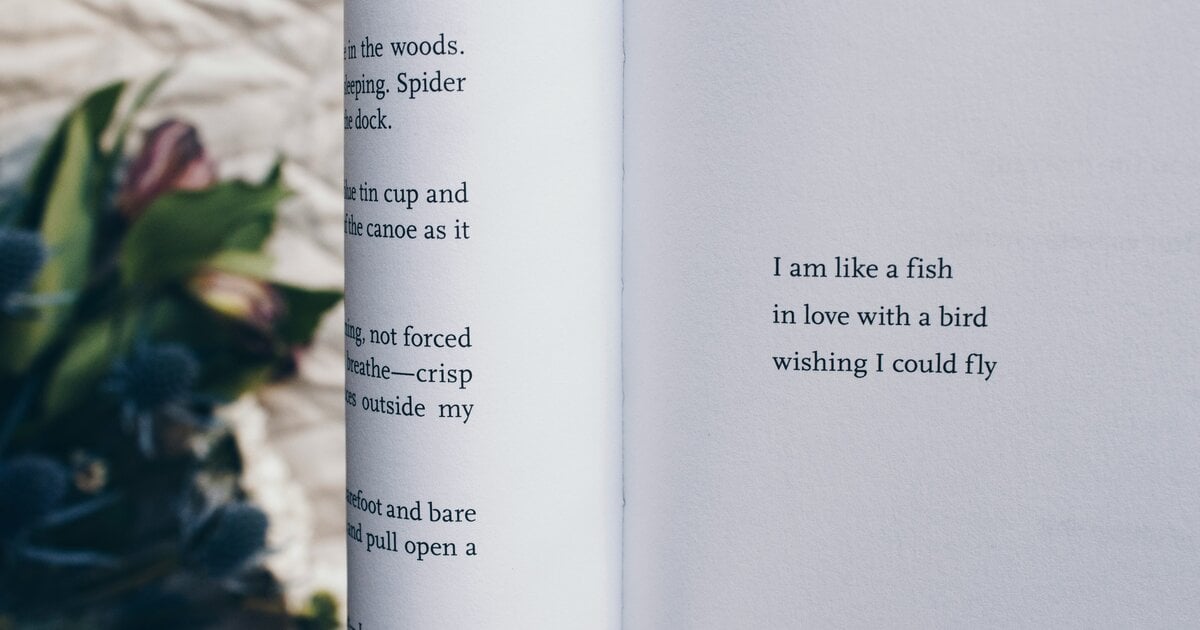

The poetry isn't in the syntax. It's in the vision. These tools let you express that vision faster than ever before.